CDH安装

1. 准备工作

1.1 环境准备

个人电脑一台,操作系统需安装ssh、ftp工具

- 本次安装使用个人电脑使用Win10,ssh工具:xshell、ftp工具:xftp

服务器

主机名 物理内存(G) CPU核数(核) 数据磁盘(G) IP地址 hadoop1 6 2 60 192.168.120.101 hadoop2 4 3 60 192.168.120.102 hadoop3 4 3 60 192.168.120.103 操作系统:CentOS - 7 - x86 -Minimal

软件包下载

CentOS7.2 Packages

1

wget -r -p -np -k http://vault.centos.org/7.2.1511/os/x86_64/Packages/ (6G)

CDH Parcel和manifest文件、CM

1 2 3 4

wget http://archive.cloudera.com/cdh5/parcels/5.7.2/CDH-5.7.2-1.cdh5.7.2.p0.18-el7.parcel (1.3G) wget http://archive.cloudera.com/cdh5/parcels/5.7.2/CDH-5.7.2-1.cdh5.7.2.p0.18-el7.parcel.sha1 (41K) wget http://archive.cloudera.com/cdh5/parcels/5.7.2/manifest.json (49K) wget http://archive.cloudera.com/cm5/cm/5/cloudera-manager-centos7-cm5.7.2_x86_64.tar.gz(600M)

JDK:jdk-8u25

2. 基础环境搭建

2.1 修改IP

本次使用的是虚拟机,NAT模式,将Ip修改为静态模式,方便后续cdh配置

以hadoop1为例

1

2

3

4

5

6

7

8

9

10

11

vi /etc/sysconfig/network-scripts/ifcfg-eno16777728

# 修改或添加一下内容

BOOTPROTO="static"

IPADDR="你的静态IP"

PREFIX="24"

GATEWAY="网关地址"

DNS1="网关地址"

# 重启网卡

service network restart

2.2 HTTP软件仓库

这是一个可选项,安装后可不依赖网络,直接从本地软件仓库下载软件

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

# 解压Package包

tar -zxvf centos

# 解压后目录结构:/data01/Packages

# 安装httpd软件

cd /data01/Packages

rpm -ivh apr-1.4.8-3.el7.x86_64.rpm

rpm -ivh apr-util-1.5.2-6.el7.x86_64.rpm

rpm -ivh httpd-tools-2.4.6-40.el7.centos.x86_64.rpm

rpm -ivh mailcap-2.1.41-2.el7.noarch.rpm

rpm -ivh httpd-2.4.6-40.el7.centos.x86_64.rpm

# 启动httpd,并设置开机启动

systemctl start httpd

systemctl enable httpd

# 安装createrepo仓库构建工具

cd /data01/Packages

rpm -ivh deltarpm-3.6-3.el7.x86_64.rpm

rpm -ivh python-deltarpm-3.6-3.el7.x86_64.rpm

rpm -ivh libxml2-python-2.9.1-5.el7_1.2.x86_64.rpm

rpm -ivh libxml2-2.9.1-5.el7_1.2.x86_64.rpm

rpm -ivh createrepo-0.9.9-23.el7.noarch.rpm

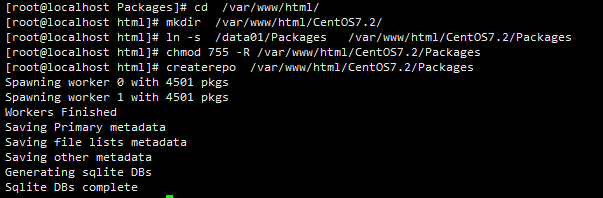

# 创建软链接

cd /var/www/html/

mkdir /var/www/html/CentOS7.2/

ln -s /data01/Packages /var/www/html/CentOS7.2/Packages

chmod 755 -R /var/www/html/CentOS7.2/Packages

# 关闭防火墙

setenforce 0

createrepo安装成功

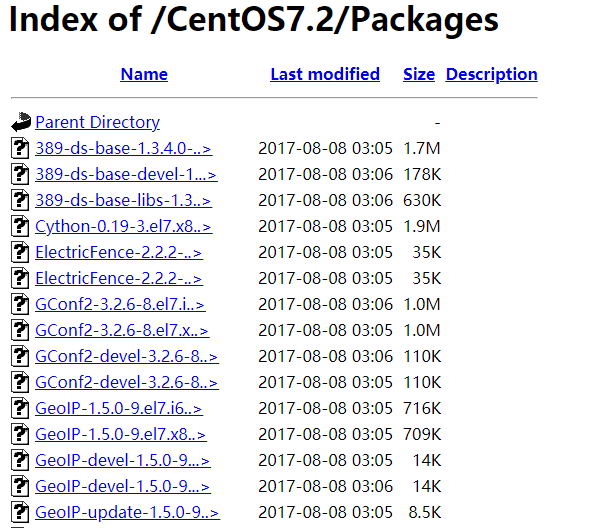

仓库搭建成功

2.2.1 配置repo

给所有直接配置repo源

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

# 备份默认文件

cd /etc/yum.repos.d/

mkdir bak

mv CentOS-*.repo bak/

# 创建源文件

vi base.repo

# 一下为文件内容

[base]

name=CentOS-Packages

baseurl=http://192.160.120.101/CentOS7.2/Packages/

gpgkey=

path=/

enabled=1

gpgcheck=0

# 检查是否配置成功

# 清理yum源

yum clean all

# 重构yum缓存

yum makecache

# 在yum源中找到vim

yum list|grep vim

2.3 修改主机名以及Host文件

在所有主机上进行操作

1

2

3

4

5

6

7

8

9

# 修改主机名

hostnamectl --static set-hostname hadoop 1.2.3

hostname

# 修改host

vi /etc/hosts

127.0.0.1 localhost

192.168.120.101 hadoop1

192.168.120.102 hadoop2

192.168.120.103 hadoop3

2.4 禁用SELinux

SELinux是Security Enhance Linux的缩写,是NASA开发的一套严格的资源权限管理系统,由于使用起来比较复杂,所以一般选择关闭

SELinux有三种模式:

- enforcing:强制模式

- permissive:宽容模式

- disabled:关闭模式

1

2

3

4

5

6

7

8

9

10

# 先临时关闭

setenforce 0

# 修改模式

sed -i 's/^SELINUX=.*/SELINUX=disabled/g' /etc/selinux/config

sed -i 's/^SELINUX=.*/SELINUX=disabled/g' /etc/sysconfig/selinux

# 重启生效

reboot

# 查看修改结果,显示为disable 为成功

cat /etc/selinux/config|grep SELINUX=

2.5 配置SSH免密登录

SSH免密登录可以帮助集群内部互相访问不需要密码,使用方式ssh username@ipaddress

配置过程:每台主机生成公钥和私钥,将所有主机的公钥(id_rsa.pub)写入到每台主机的authorized_keys,这样就实现了免密登录

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# 在所有主机中

# 备份ssh配置文件

cp /etc/ssh/sshd_config /etc/ssh/sshd_config.bak

# 修改配置文件

sed -i 's/\#RSAAuthentication/RSAAuthentication/g' /etc/ssh/sshd_config

# 修改重启服务

/bin/systemctl restart sshd.service 1>/dev/null

# 生成公钥和私钥,一直按回车就可以了

cd ~

ssh-keygen -t rsa

# 将所有的公钥发送到hadoop1

scp /root/.ssh/id_rsa.pub root@hadoop(1,2,3):/root/hadoop1.pub

# 在hadoop1中

# 将所有公钥写入authorized_keys

cat /root/*.pub >> /root/.ssh/authorized_keys

# 分发写好的授权文件到各台节点

scp /root/.ssh/authorized_keys root@hadoop(2,3):/root/.ssh/authorized_keys

2.6 时间同步

2.6.1 时区设置

1

2

3

4

5

# 所有节点

# 时区修改

timedatectl set-timezone "Asia/Shanghai"

# 时区查看

timedatectl

2.6.2 时间同步

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

# 在所有主机上

yum -y install ntp

# 在hadoop1中

# 备份原始配置文件&修改配置文件

cp /etc/ntp.conf /etc/ntp.conf.bak

vim /etc/ntp.conf

# 将一下内容注释掉

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

# 并在后面追加

restrict 192.168.120.101 mask 255.255.255.0 nomodify notrap

server 127.127.1.1

# 修改完成后退出,重启ntpd

systemctl restart ntpd

systemctl enable ntpd

# 在除hadoop1的其他主机上

# 注释掉相同内容,并添加一下代码

server 192.168.120.101 perfer

# 时间校队

ntpdate hadoop1

service ntpd start

ntpq -p

# 如果是Ubuntu

# 设置计划任务

crontab -e

# 写入如下内容

*/5 * * * * /usr/sbin/ntpdate 192.168.120.101 >/dev/null 2>&1

2.7 一些影响集群效率的配置

1

2

3

4

5

6

7

# 关闭THP

echo never > /sys/kernel/mm/transparent_hugepage/enabled

echo never > /sys/kernel/mm/transparent_hugepage/defrag

# 修改swappiness

echo "vm.swappiness=0" >> /etc/sysctl.conf

sysctl -p

cat /proc/sys/vm/swappiness

2.8 安装JDK

1

2

3

4

5

6

7

8

9

10

# 所有主机

# 首先将jdk包上传到/root目录下

cd /root

mkdir /usr/lib/java/

tar zxvf /root/jdk-8u25-linux-x64.tar.gz -C /usr/lib/java/

echo "export JAVA_HOME=/usr/lib/java/jdk1.8.0_25/" >>/etc/profile

echo 'export PATH=$PATH:$JAVA_HOME/bin/' >>/etc/profile

source /etc/profile

java -version

rm -rf /root/jdk-8u25-linux-x64.tar.gz

如果java -version 显示为openjdk,那就卸载掉openjdk

2.9安装MySql

1

2

3

4

5

6

7

8

# 在hadoop1上

# 安装mysql

yum -y install mariadb-server mariadb

# 启动并设置开机自启

systemctl start mariadb.service

systemctl enable mariadb.service

# 进入mysql 并设置密码,默认为空

mysql -u root -p

一下为mysql上的操作

show databases;

use mysql;

update user set password=password('123456') where user= 'root';

grant all privileges on *.* to root@'%' identified by '123456';

grant all privileges on *.* to root@'hadoop155' identified by '123456';

grant all privileges on *.* to root@'localhost' identified by '123456';

FLUSH PRIVILEGES;

将编码修改为utf-8

1

2

3

4

5

6

7

vim /etc/my.cnf

# 在[mysqld]下加入下面内容

character_set_server = utf8

# 重启数据库

systemctl restart mariadb

在MySql中创建CDH所需要的数据库

create database hive DEFAULT CHARSET latin1 COLLATE latin1_general_ci;

create database amon DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

create database hue DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

create database monitor DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

create database oozie DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive';

GRANT ALL PRIVILEGES ON hive.* TO 'hive'@'localhost';

CREATE USER 'hive'@'%' IDENTIFIED BY 'hive';

GRANT ALL PRIVILEGES ON hive.* TO 'hive'@'%';

CREATE USER 'hive'@'hadoop1' IDENTIFIED BY 'hive';

GRANT ALL PRIVILEGES ON hive.* TO 'hive'@'hadoop1';

CREATE USER 'oozie'@'localhost' IDENTIFIED BY 'oozie';

GRANT ALL PRIVILEGES ON oozie.* TO 'oozie'@'localhost';

CREATE USER 'oozie'@'%' IDENTIFIED BY 'oozie';

GRANT ALL PRIVILEGES ON oozie.* TO 'oozie'@'%';

CREATE USER 'oozie'@'hadoop1'IDENTIFIED BY 'oozie';

GRANT ALL PRIVILEGES ON oozie.* TO 'oozie'@'hadoop1';

CREATE USER 'monitor'@'localhost' IDENTIFIED BY 'monitor';

GRANT ALL PRIVILEGES ON monitor.* TO 'monitor'@'localhost';

CREATE USER 'monitor'@'%' IDENTIFIED BY 'monitor';

GRANT ALL PRIVILEGES ON monitor.* TO 'monitor'@'%';

CREATE USER 'monitor'@'hadoop1'IDENTIFIED BY 'monitor';

GRANT ALL PRIVILEGES ON monitor.* TO 'monitor'@'hadoop1';

FLUSH PRIVILEGES;

在所有主机上安装mysql-connector驱动

1

2

# 安装完成后的目录 /usr/share/java/mysql-connector-java.jar

yum -y install mysql-connector-java

卸载依赖包

1

2

3

4

5

6

7

8

# 安装以后依赖包

yum -y install psmisc

yum –y install perl

yum install nfs-utils portmap

systemctl stop nfs

systemctl start rpcbind

systemctl enable rpcbind

至此,所有的基础环境已经搭建完成

接下来是CDH的搭建

3. CDH搭建

3.1 安装CM

所有主机上传CM相关包cloudera-manager-centos7-cm5.7.2_x86_64.tar.gz到硬盘/data01,也就是第一步下载的东西

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

# 所有节点

cd /data01

mkdir /opt/cloudera-manager

tar -zxvf /data01/cloudera-manager-centos7-cm5.7.2_x86_64.tar.gz -C /opt/cloudera-manager

# 授权

useradd --system --home=/opt/cloudera-manager/cm-5.7.2/run/cloudera-scm-server --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

usermod -aG wheel cloudera-scm

# 修改发送心跳的路径

sed -i "s/^server_host=.*/server_host=hadoop1/g" /opt/cloudera-manager/cm-5.7.2/etc/cloudera-scm-agent/config.ini

# 确认修改成 显示:server_host=hadoop1

cat /opt/cloudera-manager/cm-5.7.2/etc/cloudera-scm-agent/config.ini|grep server_host

# 设置数据库驱动(创建软连接)

ln -s /usr/share/java/mysql-connector-java.jar /opt/cloudera-manager/cm-5.7.2/share/cmf/lib/mysql-connector-java.jar

# hadoop1上

mkdir /var/cloudera-scm-server

chown -R cloudera-scm:cloudera-scm /var/cloudera-scm-server

chown -R cloudera-scm:cloudera-scm /opt/cloudera-manager

# 所有节点上

mkdir -p /opt/cloudera/parcels

chown cloudera-scm:cloudera-scm /opt/cloudera/parcels

# 在hadoop1上

# 上传CDH parcels

mkdir /opt/cloudera/parcel-repo

cp /data01/CDH-5.7.2-1.cdh5.7.2.p0.18-el7.parcel /opt/cloudera/parcel-repo/

cp /data01/CDH-5.7.2-1.cdh5.7.2.p0.18-el7.parcel.sha1 /opt/cloudera/parcel-repo/CDH-5.7.2-1.cdh5.7.2.p0.18-el7.parcel.sha

cp /data01/manifest.json /opt/cloudera/parcel-repo/

chown -R cloudera-scm:cloudera-scm /opt/cloudera/parcel-repo

ll /opt/cloudera/parcel-repo

# 初始化数据库

/opt/cloudera-manager/cm-5.7.2/share/cmf/schema/scm_prepare_database.sh mysql -hhadoop1 -uroot -p123456 --scm-host hadoop1 scmdbn scmdbu scmdbp

出现下图中的 All done,your SCM database is configured correctly! 则说明数据库配置正确

如果出现Access denied for user ‘root’@’localhost’ (using password: YES):

请检查/etc/hosts里面是否有 127.0.0.1 localhost,然后确认mariadb的数据库创建语句中权限分配是否正确。

如果出现Can’t create database ‘scmdbn’; database exists:

请登录mysql,删除该scmdbn数据库,再重新执行上面的初始化数据库的SQL命令,删除该数据库的SQL语句为:

drop database scmdbn;

启动CM server

1

2

3

4

5

6

7

# 在hadoop1上

/opt/cloudera-manager/cm-5.7.2/etc/init.d/cloudera-scm-server start

/opt/cloudera-manager/cm-5.7.2/etc/init.d/cloudera-scm-server status

# 在所有节点上

/opt/cloudera-manager/cm-5.7.2/etc/init.d/cloudera-scm-agent start

/opt/cloudera-manager/cm-5.7.2/etc/init.d/cloudera-scm-agent status

# 如果需要关闭CM,将start改成stop即可

卸载openJdk

1

2

3

4

5

6

# 查看是否安装openjdk

rpm -qa|grep java

# 卸载掉所有包含openjdk的东西

rpm -e --nodeps java-1.7.0-openjdk-1.7.0.9-2.3.4.1.el6_3.i686

rpm -e --nodeps java-1.6.0-openjdk-1.6.0.0-1.50.1.11.5.el6_3.i686

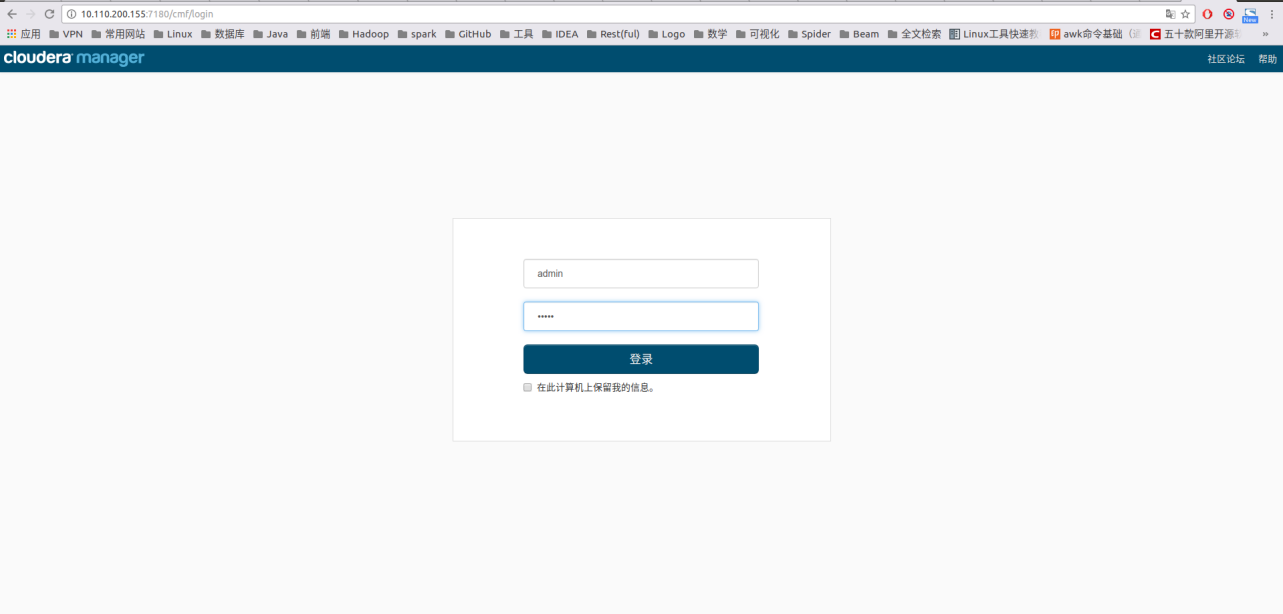

3.2 CDH安装

- 账号密码均为admin

- 在这里选择免费

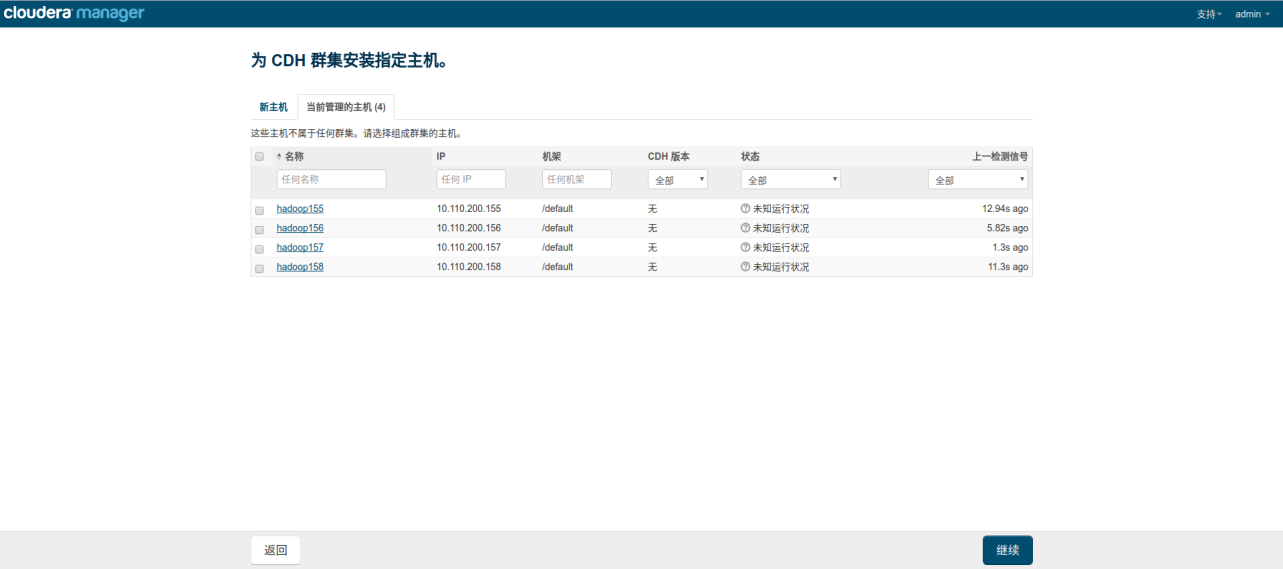

- 选择所有主机

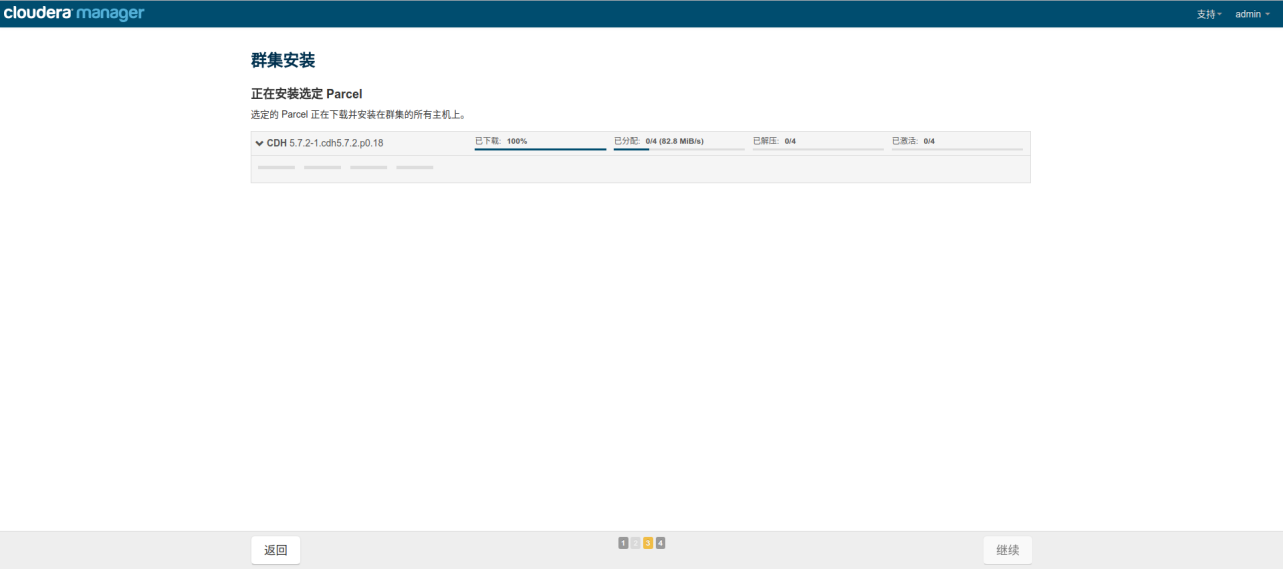

- 选择已经下载好的parcel

- 等待分配和安装

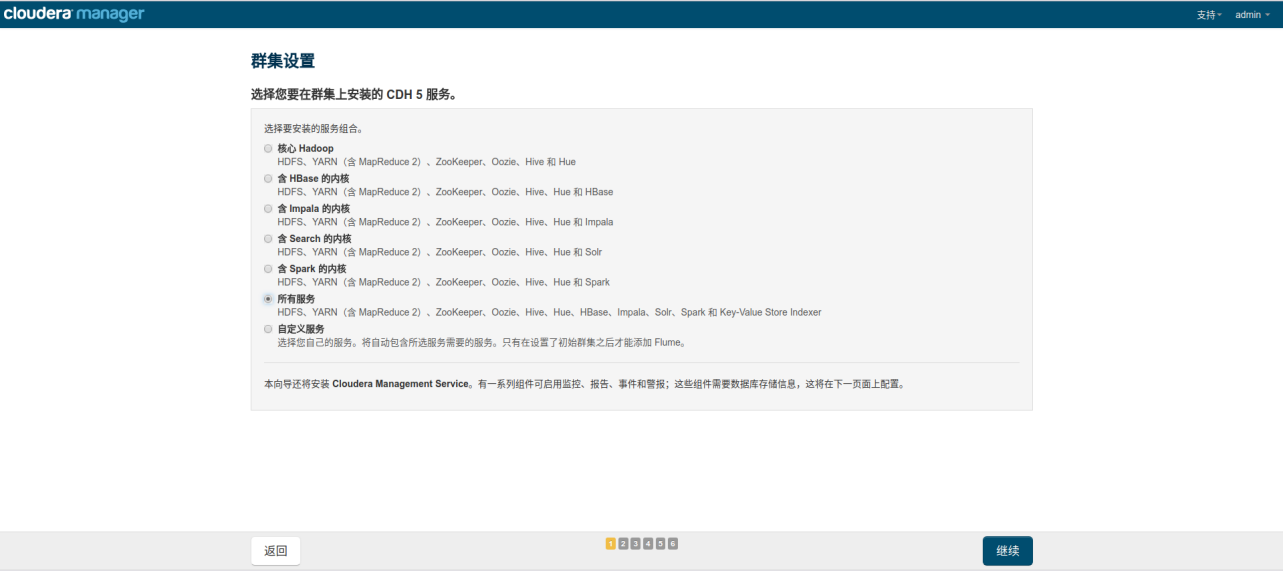

- 根据需求选择所需要的服务

- 根据规划选择相应的服务到不同的主机上去

- 一般来说,Master-Slave结构的服务Master只能安装到一台主机上(例如NameNode),而Slave则可以同时安装到多台(>=2)主机上(例如DataNode)

- NameNode和SecondaryNameNode不能安装在同一主机上,这两台主机的硬件配置需要一致

- 建议所有DataNode配置一致,并且DataNode主机都安装Yarn的RegionServer

- 各服务最好合理分配到不同节点,避免单个节点同时运行多种任务,导致负载过大

- 配置hive,oozie的数据库信息,填写之前已经写好的的账号密码即可

- 服务具体配置,默认即可,有需求的可以根据自己的需求进行更改

- 配置完成后,开始安装服务